Ever since the launch of the Denodo AI SDK, we have been overwhelmed and pleasantly surprised by our customers’ strong interest and rapid adoption. This validates our belief that a robust data foundation is critical for AI success, and that a logical approach to data management and delivery is the simplest, most scalable way to develop RAG-based applications on top of disparate, siloed corporate data repositories. As we continue to work at the forefront of AI development, it’s fair to say that all paths now lead to agentic AI.

While this is likely to be a long journey with plenty of bumps along the way, agentic AI promises to drive even more transformational change. In this post, I want to share our perspective on what customers need to focus on with agentic AI and how we can help them on their journey.

Pick Your Brain Wisely

As I discussed in a recent LinkedIn post, while the power and value of agents come from their modularity and flexibility, the most critical component is the brain: the large language model (LLM). Selecting the right model is becoming increasingly important in the era of agents, since in agentic AI, models are responsible not only for responding but also for overall reasoning and planning. The choice of model can literally make or break your AI agent implementations (and I have seen plenty of broken AI agents).

At Denodo, we recognize the importance of LLM selection, so we want our customers to take full advantage of the rapid innovations in this space. This is why, unlike many other solution vendors, we provide customers with tremendous choice and flexibility when it comes to model selection.

With the latest version of the Denodo AI SDK, we have expanded our already extensive model integration support in terms of both LLM providers and model types. The Denodo AI SDK now supports innovative LLM providers such as SambaNova and new reasoning models such as DeepSeek R1 and OpenAI “o” series models. We believe that it is crucial to select the right model for the right task for the right agent, especially as we expand into the realm of agentic workflows based on multi-step plans.

Improving Accuracy and Relevance

To succeed, enterprise AI agents need to provide accurate, relevant responses. However, this is easier said than done, as data agents often need access to real-time data, and the answers often need to be exact.

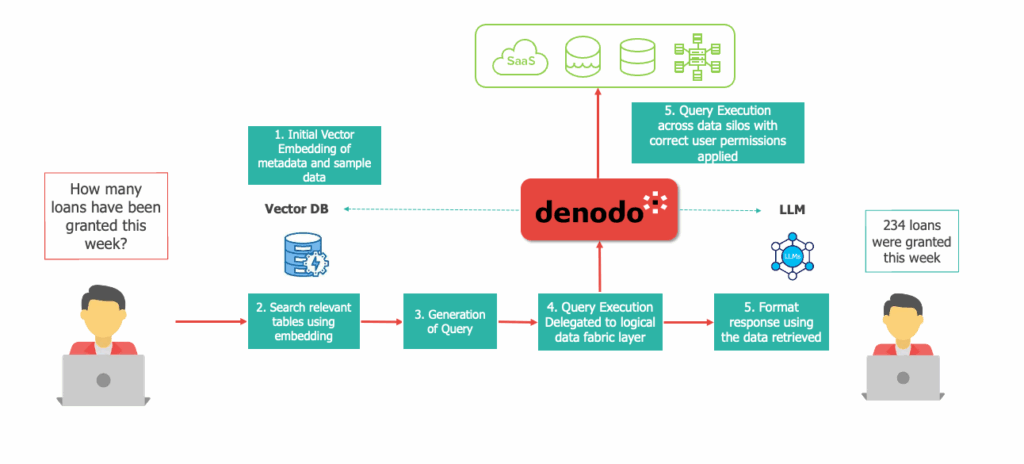

We continue to believe that the Query RAG pattern, coupled with a rich semantic layer, goes a long way toward delivering the accuracy and relevance needed for enterprise deployment of AI applications. We are laser-focused on improving accuracy, and we continue to move the needle in other ways as well.

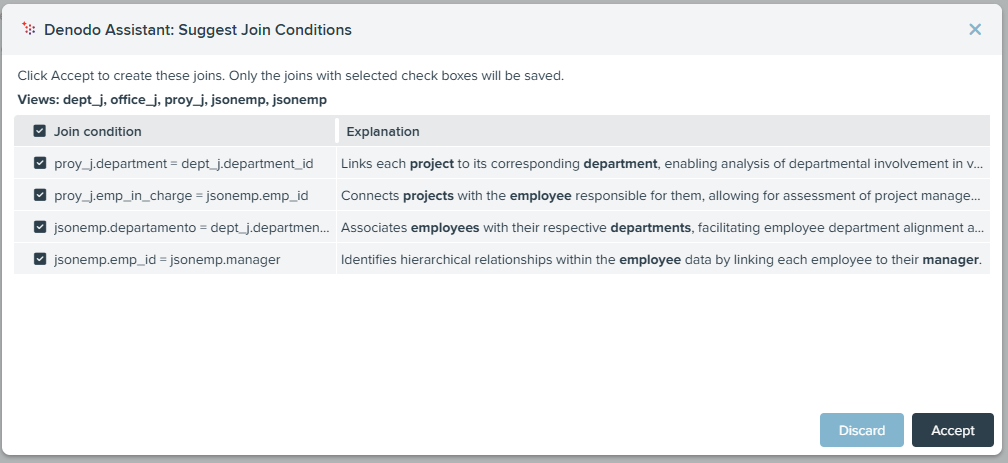

With the recent release of Denodo Platform 9.2, we have expanded the capabilities of Denodo Assistant to help simplify and automate the process of enriching the semantic layer. Denodo Assistant can now utilize AI to automatically suggest join conditions during the data model development process. Similar to GraphRAG, the relationships and Associations built within the Denodo Platform’s semantic layer provide LLMs with valuable, rich context, and we want to accelerate and simplify how these are created within the Denodo Platform.

In addition, we have added support for custom instructions to the Denodo AI SDK, which enables our customers to inject company- or domain-specific context into the prompt construction pipeline. This helps produce more accurate, relevant responses, enabling more targeted applications and deployments of the Denodo AI SDK. Prompt customization and engineering are becoming a team effort, and we want to involve our customers more by giving them more options for prompt construction, which will ultimately improve the relevance of AI responses for targeted users.
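To make the idea of injecting context into a prompt construction pipeline concrete, here is a minimal Python sketch. It is a hypothetical illustration, not the Denodo AI SDK's API: the function `build_prompt` and its parameters `schema_context` and `custom_instructions` are assumptions chosen for clarity. It simply shows customer-supplied instructions being added as their own section between the schema context and the user's question.

```python
# Hypothetical sketch: NOT the Denodo AI SDK API. The function and parameter
# names below are illustrative assumptions.


def build_prompt(question: str, schema_context: str, custom_instructions: str = "") -> str:
    """Assemble an LLM prompt from fixed sections, optionally injecting
    company- or domain-specific instructions before the user question."""
    sections = [
        "You answer questions using only the data described below.",
        f"Schema context:\n{schema_context}",
    ]
    if custom_instructions.strip():
        # Customer-supplied context gets its own section, placed after the
        # schema and before the question, so it frames how both are read.
        sections.append(f"Custom instructions:\n{custom_instructions.strip()}")
    sections.append(f"Question: {question}")
    return "\n\n".join(sections)


prompt = build_prompt(
    question="What was revenue last quarter?",
    schema_context="view sales(region, amount, fiscal_quarter)",
    custom_instructions="Fiscal quarters start in February. Report amounts in EUR.",
)
print(prompt)
```

Keeping the instructions in a separate, optional section means the same pipeline serves every deployment, while each team supplies only the domain knowledge (fiscal calendars, units, terminology) that the schema alone cannot convey.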

Agentic Integration with MCP

Our strategic focus on openness and industry standards extends to agentic integration, which is why we are very excited about the Model Context Protocol (MCP). Introduced by Anthropic, MCP offers a standardized, flexible way to provide LLMs with additional context and access to tools. It also establishes a standard communication protocol for multi-agent integration and collaboration.

We have always taken an open approach with the Denodo AI SDK, which has had built-in support for REST API integration from day one. We believe that adding MCP support provides a more powerful and flexible integration layer. The latest version of the Denodo AI SDK now includes an MCP server implementation, which means the Denodo AI SDK and chatbot can be integrated with any MCP-compliant client, opening up a whole world of possibilities for our customers.
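MCP messages are JSON-RPC 2.0. To illustrate what an MCP-compliant client sends when it invokes a tool exposed by a server, here is a minimal Python sketch that builds a `tools/call` request. The tool name `ask_data` and its `question` argument are hypothetical and are not the tool names exposed by the Denodo AI SDK's MCP server; a real client would discover the actual tools and their input schemas with a `tools/list` request. The transport (stdio or HTTP) and the `initialize` handshake are omitted.

```python
import json


def make_tools_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",          # every MCP message is JSON-RPC 2.0
        "id": request_id,          # lets the client match the server's response
        "method": "tools/call",    # MCP method for invoking a server-side tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# "ask_data" and its "question" argument are hypothetical placeholders;
# real names come from the server's `tools/list` response.
payload = make_tools_call(1, "ask_data", {"question": "Top 5 customers by revenue?"})
print(payload)
```

Because the request shape is fixed by the protocol rather than by any one vendor, the same client code can call tools on any MCP server. This is what makes an MCP server implementation a more flexible integration point than a product-specific REST API.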

We plan to further expand our MCP support as the standard evolves, particularly in areas like security, authentication, and access control. We believe MCP and similar standards will be foundational in enabling a fully agentic ecosystem, in which AI agents can collaborate and communicate autonomously within trusted enterprise environments. By supporting these standards early, Denodo aims to give customers a head start in building robust, future-proof AI ecosystems based on openness and interoperability.

The Agentic Future

At Denodo, we are deeply committed to enabling the next phase of enterprise AI. From model flexibility to prompt customization, and from semantic enrichment to agentic integration, our goal is to provide customers with the tools they need to build powerful, trustworthy AI agents. As the landscape shifts from chatbots to agents, we invite our customers to take this journey with us: pushing boundaries, embracing standards, and unlocking the full potential of agentic AI.

- From Chatbots to Agents: Navigating the Future of AI - July 3, 2025