When you use Data Virtualization as your Enterprise Data Layer, certain security aspects must be taken into account so that access to the virtual views and the connected data sources is secured.

This post describes the features and considerations that a Data Virtualization Platform should provide so that your Enterprise Data Layer is not only secured but also controlled through auditing and monitoring options.

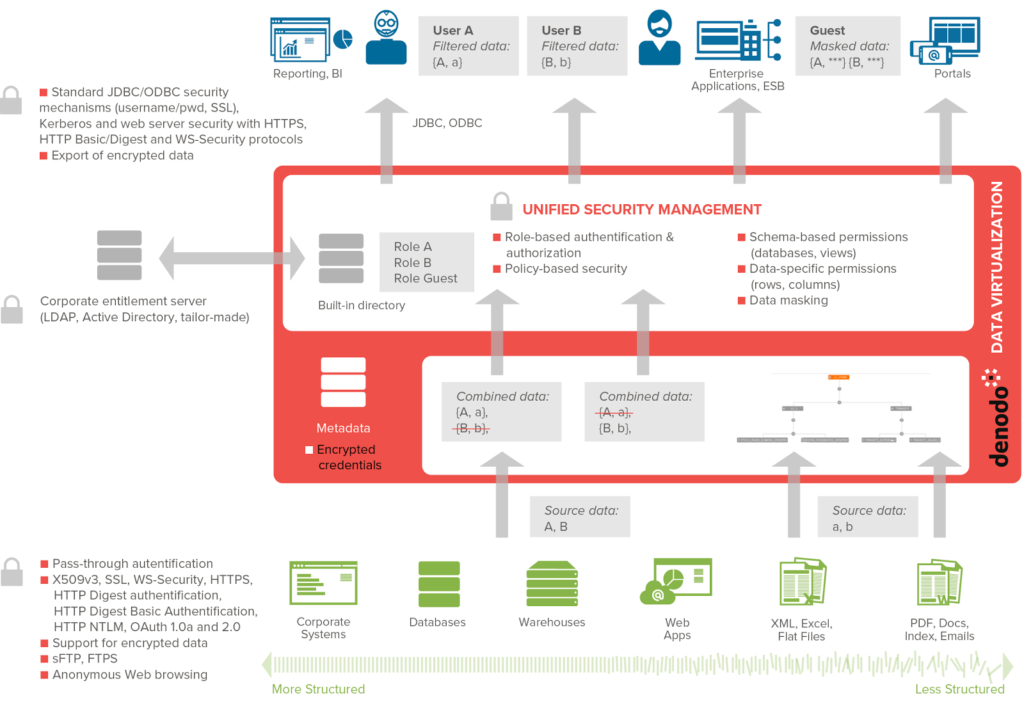

When your Enterprise Data Layer uses Data Virtualization the architecture will look like the one in the following diagram, where your Data Virtualization Platform is located between your data sources and your consuming applications:

Taking into account this architecture, there are two points where an authentication process happens in your Enterprise Data Layer. The first one, southbound, is the authentication that happens between the DV server and the data sources connected to it. The second one, northbound, is the one that happens between the consuming applications and the Data Virtualization Server.

Southbound

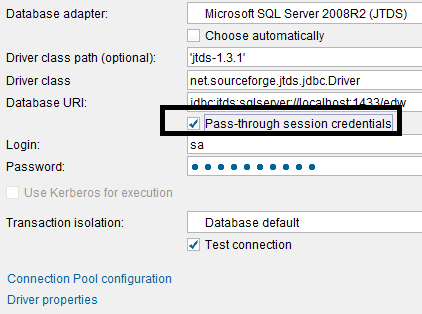

The first step in accessing your data sources is to configure the connection parameters, including the authentication type that will be used.

To access the data sources, it is common to configure a system account in each data source for the Data Virtualization server to use. Every query, no matter which user executes it, will use this system account to connect to the data source. That means that in your data source logs, the user logged will be the system account. For some data sources it is possible to record, for logging purposes, a different user than the one opening the connection. In Oracle, for example, the “v$session.osuser” variable can be used for this, and your Data Virtualization platform should leverage this option for more detailed logging. That will allow your data source logs to show which user is accessing your Enterprise Data Layer.
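As a minimal sketch of this idea, the function below builds connection properties for a shared system account and tags them with the end user who issued the query. The function name, the `svc_dv` account and the dictionary layout are hypothetical; only the `v$session.osuser` key comes from the Oracle example above, and how it is actually passed depends on your driver.

```python
def build_connection_properties(system_user, system_password, end_user):
    """Return connection properties for a shared system account.

    The connection is always opened as the system account; the end user is
    attached only as a label so the data source can record who ran the query.
    """
    if not end_user:
        raise ValueError("end_user is required for auditing")
    return {
        "user": system_user,
        "password": system_password,
        # Key taken from the Oracle example in the text; other sources differ.
        "v$session.osuser": end_user,
    }


# Hypothetical account names, for illustration only.
props = build_connection_properties("svc_dv", "secret", "alice")
print(props["user"], props["v$session.osuser"])
```

The data source still authenticates only the system account; the extra property is informational and should not be treated as proof of identity.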

In other scenarios you may want to leverage your current LDAP or Active Directory (AD) credentials so you can take advantage of any security restrictions already in place in your data source. For that reason, pass-through authentication and Kerberos options should be available in your Data Virtualization Platform of choice.

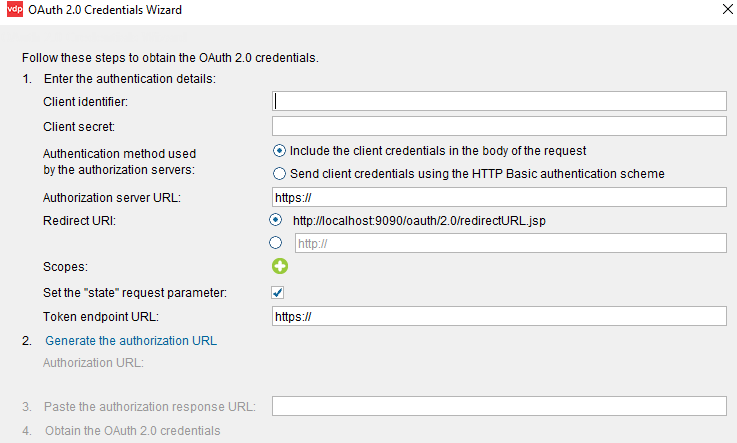

Because your Enterprise Data Layer should give you access not only to standard data sources such as databases but also to newer Cloud and Big Data sources, you need to be sure that your Data Virtualization provider is up to speed on the authentication methods available. For example, it is common for SaaS (Software as a Service) APIs to provide web service access with OAuth authentication, which requires several steps to obtain the authentication tokens. If your Data Virtualization GUI provides a wizard to facilitate this process, then you have one less step to worry about and to maintain.
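To illustrate the kind of token handling such a wizard hides, here is a minimal sketch of a cache that obtains an OAuth access token and refreshes it shortly before it expires. `TokenCache` and `fake_fetch` are hypothetical names; the fetch function is injected so that no real provider endpoint is assumed, and only the `access_token` and `expires_in` response fields come from the OAuth 2.0 specification.

```python
import time


class TokenCache:
    """Cache an OAuth access token and refresh it shortly before it expires."""

    def __init__(self, fetch_token, clock=time.monotonic, margin_seconds=30):
        self._fetch_token = fetch_token  # callable returning a token response dict
        self._clock = clock
        self._margin = margin_seconds
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            response = self._fetch_token()
            self._token = response["access_token"]
            self._expires_at = now + response["expires_in"]
        return self._token


# Stand-in for a real call to the provider's token endpoint.
calls = []


def fake_fetch():
    calls.append(1)
    return {"access_token": f"token-{len(calls)}", "expires_in": 3600}


fake_now = [0.0]  # controllable clock so the example does not need to sleep
cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])
first = cache.get()
second = cache.get()   # served from the cache
fake_now[0] = 3580.0   # inside the 30-second refresh margin
third = cache.get()    # triggers a refresh
```

Injecting the clock and the fetch function keeps the refresh logic testable without network access.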

Northbound

This is the authentication that happens at the Data Virtualization level when a client application, such as a reporting tool, a data service or an administration tool, accesses your Enterprise Data Layer.

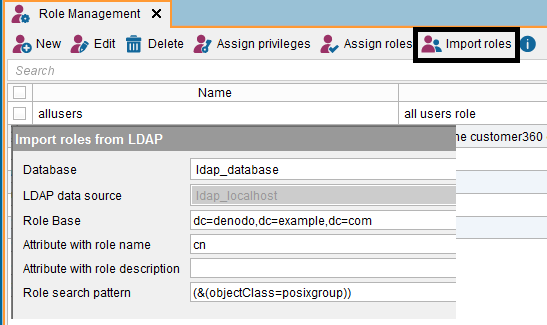

The first thing to define at this point is the set of users and roles to which privileges will be assigned. For this task, your Data Virtualization server should give you multiple options, such as creating new users and roles that are stored in the server itself, or leveraging the user credentials and role/group organization already available in your LDAP or AD server. With the second option you don’t need to worry about synchronizing your credentials or groups, as authentication and group validation happen on the fly directly against your LDAP/AD server. This approach also ensures that your current security policies, such as password expiration or password constraints, are enforced, because the LDAP/AD server continues to manage them.
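As a rough sketch of resolving roles on the fly, the function below maps the groups returned by the directory for a user to roles in the virtualization layer. The group names, role names and mapping are hypothetical, and the directory lookup itself is replaced by a hard-coded list.

```python
def roles_for_user(user_groups, group_to_role):
    """Map the groups returned by the directory to virtualization-layer roles."""
    return sorted({group_to_role[g] for g in user_groups if g in group_to_role})


# Hypothetical mapping from directory groups to roles.
GROUP_TO_ROLE = {
    "cn=finance,ou=groups,dc=example,dc=com": "finance_reader",
    "cn=dv-admins,ou=groups,dc=example,dc=com": "admin",
}

# In a real deployment these groups would come from an LDAP/AD lookup at login.
groups = [
    "cn=finance,ou=groups,dc=example,dc=com",
    "cn=cafeteria,ou=groups,dc=example,dc=com",
]
roles = roles_for_user(groups, GROUP_TO_ROLE)
```

Groups without a mapping are ignored, so membership in unrelated directory groups grants nothing in the data layer.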

Your Data Virtualization Platform should also provide Kerberos support to allow features such as SmartCard access or Single Sign-On when authenticating to your Enterprise Data Layer.

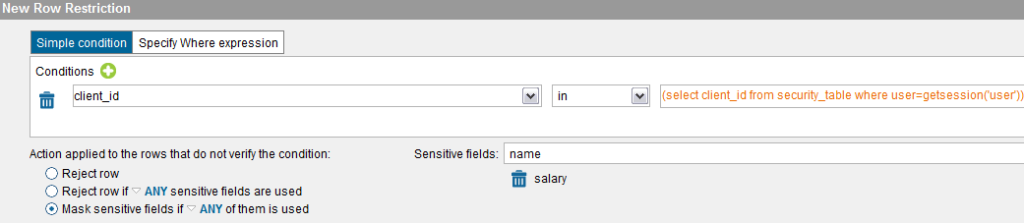

Once users and roles are available, the next step is to grant privileges to them. At this level your Data Virtualization Platform should give you the most fine-grained security possible, so users of your Enterprise Data Layer can only see the data they are entitled to. That means not only schema-wide permissions (e.g., to access virtual databases and views) but also data-specific permissions (e.g., to access specific rows or columns in a virtual view). This lets you configure access in your Enterprise Data Layer down to the cell level (applying both row-based and column-based security), including the possibility of masking specific cells (e.g., managers are not allowed to view the “salary” column of higher-level management, so those cells would appear masked in the results).

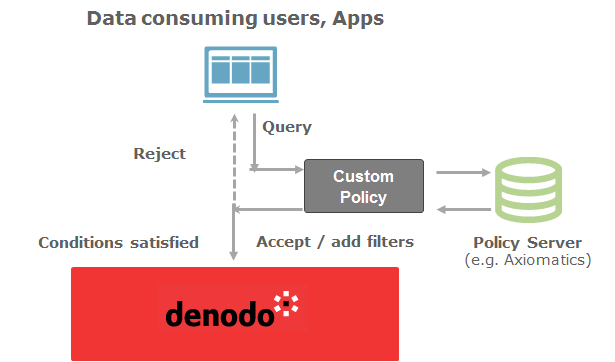
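The cell-level security described above can be sketched as three steps applied to each row: filter rows, project the allowed columns, and mask individual cells. This is only an in-memory illustration under assumed names (`apply_policy`, the sample employee data and the `level` field are hypothetical); a real platform would typically enforce the same rules inside the query engine.

```python
def apply_policy(rows, allowed_columns, row_filter, masked_columns, mask="****"):
    """Apply row filtering, column projection and cell masking, in that order."""
    result = []
    for row in rows:
        if not row_filter(row):
            continue  # row-level security: drop rows the user may not see
        visible = {}
        for column in allowed_columns:  # column-level security
            value = row[column]
            should_mask = masked_columns.get(column)
            if should_mask is not None and should_mask(row):
                value = mask  # cell-level masking
            visible[column] = value
        result.append(visible)
    return result


employees = [
    {"name": "Ana", "dept": "sales", "level": 1, "salary": 40000, "ssn": "111"},
    {"name": "Bob", "dept": "sales", "level": 3, "salary": 90000, "ssn": "222"},
    {"name": "Cy", "dept": "hr", "level": 1, "salary": 45000, "ssn": "333"},
]

viewer_level = 2  # a manager at level 2 in the sales department
view = apply_policy(
    employees,
    allowed_columns=["name", "level", "salary"],  # "ssn" is never exposed
    row_filter=lambda r: r["dept"] == "sales",    # only the viewer's department
    masked_columns={"salary": lambda r: r["level"] > viewer_level},
)
```

Here the viewer sees both sales employees, but the salary of the higher-level one is replaced by the mask.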

In some scenarios you may already be using a policy or entitlement system that you want to leverage or integrate in your Enterprise Data Layer. For that reason, advanced Data Virtualization solutions should offer extension points, such as custom policies, to allow that integration. Similar concepts already exist in some systems, such as Oracle (Virtual Private Database policies). Those policies facilitate the integration with the third-party system and can:

- Allow / Reject the query

- Add a filtering condition based on the restrictions returned by the policy server
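The two outcomes listed above can be sketched as follows. This is not any product's extension API: `PolicyDecision`, `evaluate_policy` and `enforce` are hypothetical names, and the policy server is replaced by a local function with made-up rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PolicyDecision:
    allowed: bool
    filter_condition: Optional[str] = None  # extra predicate to add to the query


def evaluate_policy(user_roles, view_name):
    """Stand-in for a call to an external entitlement system."""
    if view_name == "payroll" and "hr" not in user_roles:
        return PolicyDecision(allowed=False)
    if view_name == "orders" and "regional_manager" in user_roles:
        return PolicyDecision(allowed=True, filter_condition="region = 'EMEA'")
    return PolicyDecision(allowed=True)


def enforce(base_query, decision):
    """Reject the query or wrap it with the filter returned by the policy."""
    if not decision.allowed:
        raise PermissionError("query rejected by policy")
    if decision.filter_condition:
        # The condition comes from the trusted policy server, never from the user.
        return f"SELECT * FROM ({base_query}) q WHERE {decision.filter_condition}"
    return base_query


query = enforce("SELECT * FROM orders", evaluate_policy({"regional_manager"}, "orders"))
```

Wrapping the original query rather than editing it keeps the restriction in place regardless of how the user wrote the query.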

Data In Motion

Both northbound and southbound communications should be secured for data in motion. Typically this is achieved with SSL connections between the consumer and the Enterprise Data Layer, and with the security protocol specific to each data source between the Data Virtualization Platform and the data sources (e.g., SSL, HTTPS, SFTP, etc.).
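On the consumer side, securing the channel usually comes down to verifying the server certificate and refusing outdated protocol versions. The snippet below is a generic sketch using Python's standard `ssl` module, not a setting of any particular Data Virtualization product; the TLS 1.2 minimum is an assumption you should align with your own security policy.

```python
import ssl

# create_default_context() enables certificate and hostname verification.
context = ssl.create_default_context()

# Refuse protocol versions older than TLS 1.2 (assumed minimum).
context.minimum_version = ssl.TLSVersion.TLSv1_2
```

The resulting context can then be passed to whichever client library opens the connection.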

Data at rest

When you use Data Virtualization in your Enterprise Data Layer, data is not stored by default: queries are sent to the data sources in real time and the results are streamed back to the client application. Advanced Data Virtualization Platforms do, however, provide a cache to improve performance and a mechanism for swapping to disk to avoid memory issues. In both cases data is stored: for a period of time in the case of the cache, and for the duration of the query in the case of swapping. This data should be secured too, by transparently leveraging any encryption mechanism available in the selected cache system (e.g., Oracle Transparent Data Encryption) or by using your operating system's encryption options on the folder used for swap data.

Auditing and monitoring

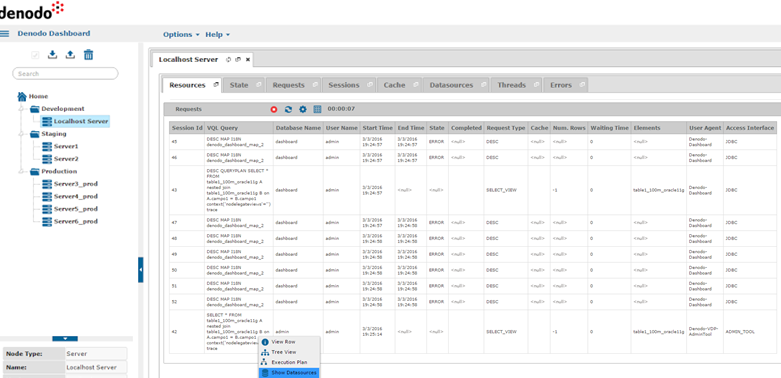

An important part of your security strategy is being able to audit and monitor every data access. With Data Virtualization as your Enterprise Data Layer, you should be able to check at any time who has accessed which resources, what changes have been made, what queries have been executed, and when each of these happened.

Those actions should not only be logged to log files or to an auditing database but also exposed through standards such as SNMP, JMX or WS-Management. That will give you the ability to integrate your Enterprise Data Layer with system management packages such as HP OpenView, Splunk, Nagios, Osmius, IBM Tivoli, Microsoft WinRM, etc.

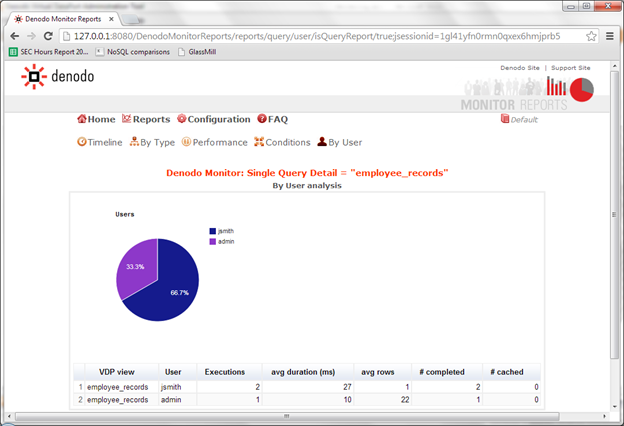
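As a minimal sketch of what one audit record might contain, the function below captures who did what, on which resource, and when, and writes it as a JSON line through Python's standard `logging` module. The field names and the `dv.audit` logger name are hypothetical; a real platform defines its own event format.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("dv.audit")  # hypothetical logger name


def audit_event(user, action, resource, statement=None):
    """Build one audit record (who, what, which resource, when) and log it as JSON."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "resource": resource,
        "statement": statement,
    }
    audit_logger.info(json.dumps(event, sort_keys=True))
    return event


event = audit_event("alice", "SELECT", "sales.orders", "SELECT * FROM orders")
```

Emitting one structured line per event makes it straightforward to forward the records to a log management tool.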

An advanced Data Virtualization Platform should also provide its own monitoring and auditing dashboard, preferably as a web application so it can be accessed from a browser without installing a standalone tool.

That dashboard should give you not only real time information about the sessions or the resources that are being accessed but also reports to help you understand if any adjustment or policy change needs to be applied.

Conclusions

A Data Virtualization Platform should include fine-grained security options for your Enterprise Data Layer so that access is secured and can be audited at any point. Selecting the Denodo Platform as your Data Virtualization Platform will add the following features to your Enterprise Data Layer:

- Unified Security Management offering a single point to control the access to any piece of information.

- Role-based access security with fine granularity. It is possible to assign privileges at the schema, element, column or row level, and even to add column masking to certain values based on filters.

- Authentication credentials can be stored either internally in the Denodo Platform, in a built-in repository, or externally in a corporate entitlements server such as an LDAP / AD repository. It is also possible to use ‘custom policies’ to connect to any other corporate security system.

- Standard Security protocols used to connect to data sources (X509v3, SSL, WS-Security, OAuth…) or to publish data to consuming applications (SSL, HTTPS, Kerberos…).

- The Denodo Platform provides an audit trail of all the information about the queries and other actions executed on the system. Denodo will generate an event for each executed statement that causes any change in the Denodo Catalog (the store of all the server metadata). With this information it is possible to check who has accessed specific resources, what changes have been made or what queries have been executed.

A detailed description of these capabilities can be found in this article located in the Denodo Community.

What other security features do you think are needed in your Enterprise Data Layer? Are you currently using Data Virtualization to enhance the security of your Enterprise Data Layer?

- Enhancing the Security of your Enterprise Data Layer - December 21, 2016
