Data virtualization deployments can be small, with a few developers and hundreds of queries a day, or large, with hundreds of developers, thousands of queries, and multiple environments to monitor and manage. When working on large deployments, we face multiple challenges, such as:

- Having multiple development teams modifying views at the same time.

- Maintaining the metadata for multiple environments such as development, testing, staging, or production. Each environment will probably have its own dependent parameters, such as connection URLs or credentials, which should be managed separately to avoid keeping multiple copies of the same metadata.

- Migrating metadata from one environment to another while maintaining consistency and ensuring the quality of the views.

- Managing system resources so that no group of users or queries monopolizes our data source connections, server memory, or CPU.

- Monitoring real-time and historical information from all our servers and environments without having to access multiple systems, tools, or distributed log files.

The Denodo Platform is designed for dealing with both small and large deployments and provides multiple key features to help overcome those common challenges. Those features include:

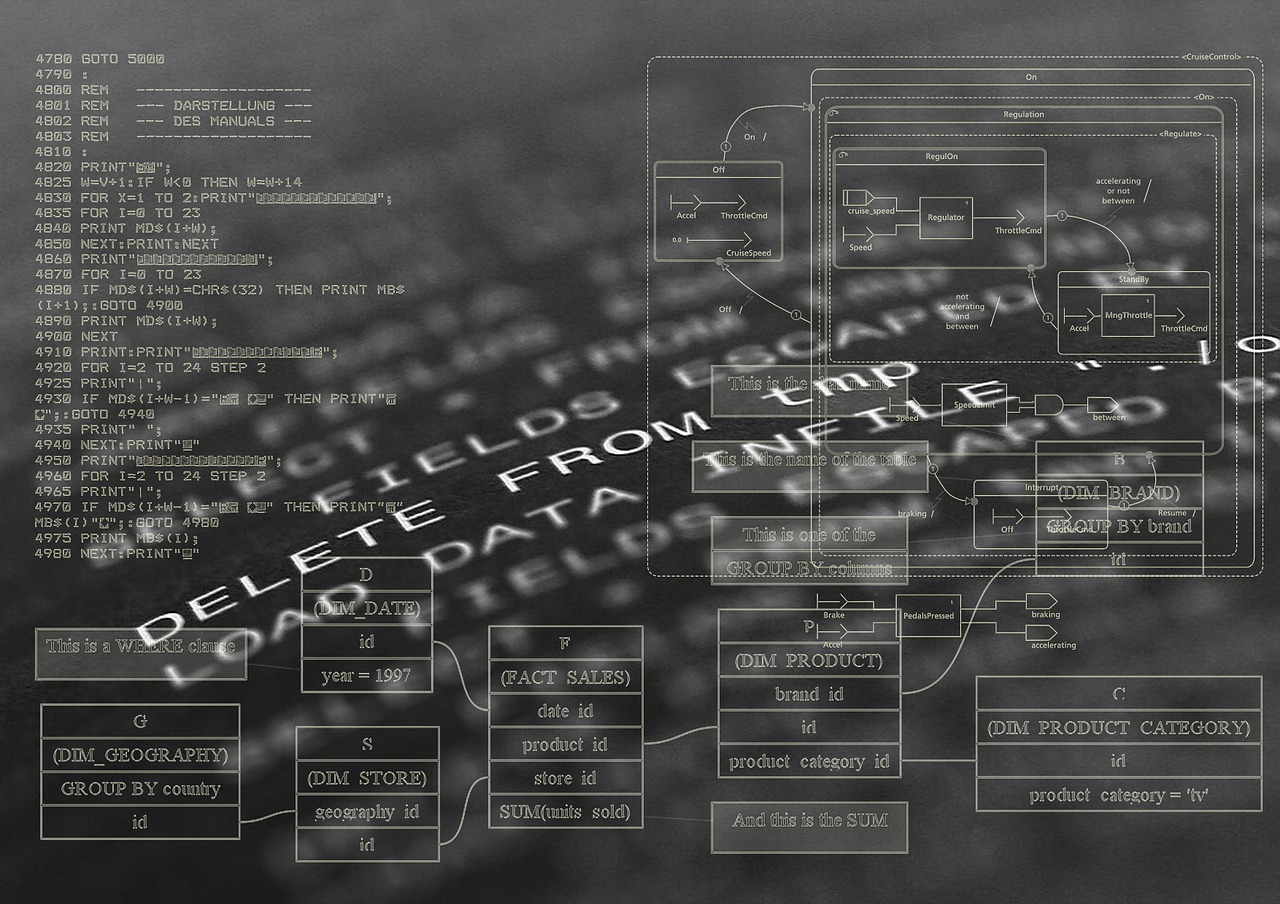

Graphical integration with Version Control Systems

Denodo integrates with the most widely used version control systems: Git, Subversion, and Microsoft TFS. Enabling this feature allows multiple development teams to work in parallel by synchronizing their local changes with a consolidated version. The check-in, check-out, and diff operations are available graphically from the Denodo Administration Tool and include dependency management, conflict control, and color flags that make it easy to identify synchronized or out-of-sync elements.

Dependent parameters organized by environments

Environments can represent, for example, different development servers for different groups or geographical locations. Each environment can have its own dependent parameters, such as data source connection properties (e.g. logins and passwords), so there is no need to replicate the metadata, for example by keeping copies of the same data source connections that differ only in the server URL. This also simplifies loading metadata: when migrating to a different environment, the process can be configured to use the properties of the target environment instead of those of the origin.
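To make the idea concrete, here is a minimal sketch in Python of keeping one shared definition and a separate set of dependent parameters per environment. It does not reproduce Denodo's actual metadata or properties file format; the template text, the placeholder names, the environment names, and all values are assumptions chosen for illustration.

```python
from string import Template

# Shared definition, written once. The text and placeholder names are
# hypothetical and are not Denodo's real metadata syntax.
DATASOURCE_TEMPLATE = Template("datasource=$name url=$url user=$user")

# One set of dependent parameters per environment (illustrative values only).
ENVIRONMENTS = {
    "development": {
        "url": "jdbc:postgresql://dev-db:5432/sales",
        "user": "dev_user",
    },
    "production": {
        "url": "jdbc:postgresql://prod-db:5432/sales",
        "user": "prod_user",
    },
}


def render_datasource(name: str, environment: str) -> str:
    """Fill the shared definition with the target environment's parameters."""
    params = ENVIRONMENTS[environment]  # raises KeyError for an unknown environment
    return DATASOURCE_TEMPLATE.substitute(name=name, **params)


print(render_datasource("sales_ds", "development"))
print(render_datasource("sales_ds", "production"))
```

The definition exists only once; moving it to another environment means selecting a different parameter set rather than editing or copying the definition.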

Easy to use graphical interface or automated migration process based on scripts

When executing a migration between environments, Denodo provides different options to fit our needs or enterprise policies. The migration can be performed:

- Directly from the Denodo Administration Tool, which provides import capabilities not only for a single server but for a list of servers, including management of the dependent parameters explained above.

- Using the Denodo Replicator, a graphical migration tool that supports defining environments and synchronizing the metadata across all their members based on a master node.

- Creating an automated process using the export/import scripts that Denodo provides for this purpose. This automated process can be defined in combination with the Denodo Testing Tool.

Configurable testing tool for validating the metadata loaded in the different environments

The Denodo Testing Tool allows Denodo users to easily automate the testing of their data virtualization scenarios by configuring their suite of tests in text files, with no programming needed. We can easily combine this tool with the migration scripts to build our own automated process. The steps could be something like:

- Obtain the consolidated version (VCS) from the development server.

- Load that version into the staging server, using its environment-dependent parameters file.

- Execute the Denodo Testing Tool group of tests.

- If any test fails, report the tests that failed.

- Otherwise, tag the version of the metadata.
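The steps above can be sketched as a small orchestration function. This is only an outline of the control flow under stated assumptions: the four callables (`export_metadata`, `import_metadata`, `run_tests`, `tag_version`) are hypothetical placeholders for whatever wraps the export/import scripts, the Denodo Testing Tool, and the version control system in a given installation. None of them is a real Denodo API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TestResult:
    name: str
    passed: bool


def run_promotion(
    export_metadata: Callable[[], str],
    import_metadata: Callable[[str, str], None],
    run_tests: Callable[[], List[TestResult]],
    tag_version: Callable[[str], None],
    properties_file: str,
    tag: str,
) -> List[str]:
    """Run the steps in order; return the names of failed tests (empty on success).

    All callables are hypothetical wrappers supplied by the caller.
    """
    # 1. Obtain the consolidated version from the development server.
    metadata = export_metadata()
    # 2. Load it into staging with the staging-specific parameters file.
    import_metadata(metadata, properties_file)
    # 3. Execute the group of tests.
    results = run_tests()
    failed = [r.name for r in results if not r.passed]
    # 4. On any failure, report the failed tests and do not tag.
    if failed:
        return failed
    # 5. Otherwise, tag the version of the metadata.
    tag_version(tag)
    return []
```

Passing the steps in as callables keeps the sketch independent of any particular script or tool invocation, and it makes the "tag only when every test passes" rule easy to check with stubs.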

A Resource Manager integrated in the Administration Tool to control system resources with fine granularity

From the Resource Manager we can ensure a fair distribution of resources among applications and users. Multiple criteria can be used to allocate the available resources, for example user/role, application name, time, access interface… Once the criteria are defined, we can assign different restrictions to them, such as changing the execution priority, limiting the number of concurrent queries, or limiting the execution time or the number of rows returned… An example can be:

For all users that belong to the role “Public”, when the system CPU usage is above 80%, limit query execution to a shorter timeout. This ensures that users in the roles “Analyst” or “Manager” have enough resources to execute their current queries.
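The logic of such a rule can be illustrated with a short Python sketch. It is not how the Resource Manager is configured (that is done from the Administration Tool); the `Rule` class, the `effective_timeout` function, and the timeout values of 30 and 300 seconds are all assumptions made for the example. Only the role name and the 80% threshold come from the rule above.

```python
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class Rule:
    role: str               # role the rule applies to
    cpu_threshold: float    # CPU usage (percent) above which the rule is active
    timeout_seconds: int    # shorter timeout applied while the rule is active


# Hypothetical values: the 30-second timeout is illustrative only.
PUBLIC_RULE = Rule(role="Public", cpu_threshold=80.0, timeout_seconds=30)


def effective_timeout(
    rule: Rule, user_roles: Set[str], cpu_usage: float, default_timeout: int
) -> int:
    """Return the timeout to apply to a query from a user with the given roles."""
    if rule.role in user_roles and cpu_usage > rule.cpu_threshold:
        # Never lengthen the timeout: the rule only restricts.
        return min(rule.timeout_seconds, default_timeout)
    return default_timeout


print(effective_timeout(PUBLIC_RULE, {"Public"}, 85.0, 300))   # restricted
print(effective_timeout(PUBLIC_RULE, {"Analyst"}, 85.0, 300))  # unaffected
```

Users outside the “Public” role keep the default timeout even under load, which is what leaves headroom for their queries.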

Single interface for Monitoring and diagnosing information

The Denodo Monitoring and Diagnosing feature can be accessed from a browser without installing any extra application. From this interface it is possible to configure a set of environments or servers so that all the information for each node, both real-time and historical, is available from a single point of access. The information includes the current sessions, queries, connections, cache load processes… It can also load all the information available for a given period of time. That capability helps the administrator understand the state of the server at the time of an issue (for example, which queries were being executed, the sources used by those queries, and the connection pool status for those sources), which simplifies diagnosis and helps in taking the proper actions faster.

All those features are now covered as part of the Fast Data Strategy Virtual Summit. You can watch my session here: Data Virtualization Deployments: How to Manage Very Large Deployments.
